Registries. They’re a big thing in interventional radiology. Go to a conference and you’ll see multiple presentations describing a new device or technique as ‘safe and effective’ on the basis of ‘analysis of prospectively collected data’. National organisations (e.g. the Healthcare Quality Improvement Partnership [HQIP] and the National Institute for Health and Care Excellence), professional societies (such as the British Society of Interventional Radiology) and the medical device industry promote them, often enthusiastically.

The IDEAL collaboration is an organisation dedicated to improving the quality of research into surgery, interventional procedures and devices. It has recently updated its comprehensive framework for the evaluation of surgical and device-based therapeutic interventions. The framework emphasises the value of comprehensive registry data collection at every stage of development, from translational research to post-market surveillance.

Baroness Cumberlege’s report into failures in the long-term monitoring of new devices, techniques and drugs identified a lack of vigilant long-term monitoring as contributing to a system that is not safe enough for the patients treated with these innovations. She recommended the creation of a central database of implanted devices to support research into, and audit of, their long-term outcomes.

This is all eminently sensible. Registries, when properly designed and funded and with a clear purpose and goal, are powerful tools for generating information about the interventions we perform. But I feel very uneasy about many registries because they often have an unclear purpose, are poorly designed and are inadequately funded. At best they create data without information. At worst they cause harm by obscuring reality or suppressing more appropriate forms of assessment.

A clear understanding of the purpose of a registry is crucial to its design. Registries work best as tools to assess safety. In a crowded and expensive healthcare economy, however, safety alone is an insufficient metric by which to judge a new procedure or device: evidence of effectiveness relative to the alternatives is crucial. If the purpose of a registry is to make some assessment of effectiveness, its design needs to reflect this.

The gold standard tool for assessing effectiveness is the randomised controlled trial [RCT]. RCTs are expensive, time-consuming, and complex to set up and coordinate. As an alternative, a registry recruiting on the basis of a specific diagnosis (equivalent to RCT inclusion criteria) is ethically simpler and frequently cheaper to instigate. While still subject to selection bias, a registry recruiting on this basis can provide data on the relative effectiveness of the various interventions (or no intervention) offered to patients with that diagnosis. Such registry data supports shared decision making by providing at least some information about all the available options.

Unfortunately, most current UK and international interventional registries use the undertaking of the intervention (rather than the patient’s diagnosis) as the criterion for entry. The lack of data collection about patients who are in some way unsuitable for the intervention or opt for an alternative (such as conservative management) introduces insurmountable inclusion bias and prevents the reporting of effectiveness and cost-effectiveness compared with alternatives. The alternatives are simply ignored (or assumed to be inferior) and safety is blithely equated with effectiveness without justification or explanation. Such registries are philosophically anchored to the interests of the clinician (interested in the intervention) rather than to those of the patient (with an interest in their disease). They are useless for shared decision making.

This philosophical anchoring is also evident in the choice of registry outcome measures, which are frequently those easiest to collect rather than those that matter most to patients: a perfect example of the McNamara (quantitative) fallacy. How often are patients involved in registry design at the outset? How often are outcome metrics relevant to them included, rather than surrogate endpoints of importance to clinicians and device manufacturers?

Even registries where the ambition is limited to post-intervention safety assessment or outcome prediction, and where appropriate endpoints are chosen, are frequently limited by methodological flaws. Failing to plan the statistical analysis at the outset, and collecting multiple baseline variables without considering the number of outcome events needed to support modelling, risks overfitting: a fundamental statistical error that produces over-optimistic models whose performance shrinks when they are applied to new patients.
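The link between the number of outcome events and the number of variables that can sensibly be modelled can be illustrated with a short calculation. This minimal Python sketch assumes the commonly cited rule of thumb of roughly 10 outcome events per candidate predictor; that threshold is a heuristic rather than a guarantee, and formal sample-size methods for prediction models are preferable in practice. The function names and all numbers are hypothetical.

```python
# Minimal sketch: events-per-variable (EPV) check for a planned outcome model.
# Assumption: the commonly cited heuristic of about 10 outcome events per
# candidate predictor. Function names and figures are hypothetical.


def events_per_variable(n_patients: int, event_rate: float, n_predictors: int) -> float:
    """Return the expected number of outcome events per candidate predictor."""
    if n_predictors <= 0:
        raise ValueError("n_predictors must be positive")
    expected_events = n_patients * event_rate
    return expected_events / n_predictors


def max_predictors(n_patients: int, event_rate: float, min_epv: float = 10.0) -> int:
    """Largest number of candidate predictors the expected events can support."""
    expected_events = n_patients * event_rate
    return int(expected_events // min_epv)


if __name__ == "__main__":
    # Hypothetical registry: 1,000 patients, 3% major complication rate,
    # 25 baseline variables collected "just in case".
    epv = events_per_variable(1000, 0.03, 25)
    print(f"Expected events per variable: {epv:.1f}")
    print(f"Predictors supportable at EPV >= 10: {max_predictors(1000, 0.03)}")
```

In this hypothetical registry, 30 expected events spread across 25 candidate variables gives barely more than one event per variable, so a model using all of them is very likely to be overfitted; the heuristic would support only about three.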

Systematic inclusion of ‘all comers’ is rare, but failure to include all patients undergoing a procedure introduces ascertainment bias. Global registries often recruit apparently impressive numbers of patients, but scratch the surface and you find recruitment rates suggesting that a majority of patients were excluded. Why? Why include one intervention or patient but not another? Such recruitment problems also affect RCTs, prompting criticism about ‘generalisability’ or real-world relevance, but it’s uncommon to see such criticism levelled at registry data, especially when it supports pre-existing beliefs or procedural enthusiasm, or endorses a product marketing agenda.

Finally, there is the issue of funding. Whether the burden of funding and conducting post-market surveillance should fall primarily on professional bodies, the government or the medical device companies that profit from the sale of their products is a subject for legitimate debate. In the meantime, however, registry funding rarely includes provision for the systematic longitudinal collation of long-term outcome data from all registrants. Pressured clinicians and nursing staff cannot prioritise data collection without the time or funding to do so. Instead, it is often assumed, for example, that the absence of a notified adverse outcome automatically represents a positive one. Registry long-term outcome data is therefore frequently inadequate. While potential solutions such as linkage to routinely collected datasets and other ‘big data’ initiatives are attractive, these data are often generic and rarely patient-focussed, and the information governance and privacy obstacles to linking such sensitive information are substantial.
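The effect of treating silence as success can be illustrated with simple arithmetic. The sketch below is a minimal Python illustration; the function name `success_rates` and all counts are hypothetical and are not taken from any registry.

```python
# Minimal sketch with hypothetical counts (not registry data): how assuming
# that "no adverse event reported" means success inflates an apparent
# long-term success rate when follow-up is incomplete.


def success_rates(successes: int, failures: int, lost: int) -> dict:
    """Return apparent success rates under three handling rules for
    patients with no follow-up information ('lost')."""
    total = successes + failures + lost
    return {
        # Silence treated as good news: every lost patient counted as a success.
        "missing_as_success": (successes + lost) / total,
        # Complete-case analysis: only patients whose outcome is actually known.
        "complete_case": successes / (successes + failures),
        # Worst case: every lost patient assumed to have had a poor outcome.
        "missing_as_failure": successes / total,
    }


if __name__ == "__main__":
    # Hypothetical cohort of 1,000: 400 confirmed good outcomes,
    # 100 confirmed poor outcomes, 500 never followed up.
    for rule, rate in success_rates(400, 100, 500).items():
        print(f"{rule}: {rate:.0%}")
```

With half of this hypothetical cohort lost to follow-up, the same dataset is compatible with a reported success rate anywhere between 40% and 90%; the headline figure depends largely on what is assumed about patients who were never contacted.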

Where does this depressing analysis leave us?

Innovative modern trial methodologies (such as cluster, preference, stepped-wedge, trial-within-cohort or adaptive trials) provide affordable, robust, pragmatic and scalable options for evaluating novel interventions and are deliverable within an NHS environment, though registries are still likely to have an important role to play. HQIP’s ‘Proposal for a Medical Device Registry’ defines key principles for registry development, including patient and clinician inclusivity and ease of routine data collection using electronic systems. Registries can be powerful sources of information about practice and novel technologies when these principles are adhered to; when they are conceived and designed around a predefined, specific hypothesis or purpose; when they use appropriate statistical methodology and relevant outcome measures; when they are coordinated by staff with the skills to manage site, funding and regulatory aspects; and when they are budgeted to ensure successful data collection and analysis. This is a high bar, but it is achievable, as the use of registry data during the COVID-19 pandemic has highlighted. Much effort is being expended on key national registries (such as the National Vascular Registry) to improve the quality and comprehensiveness of the data collected and to create links to other datasets.

But where these ambitions are not achieved, we must remain highly sceptical of any evidence that registry data purports to present. Fundamentally, unclear registry purpose, poor design and inadequate funding guarantee both garbage in and garbage out.

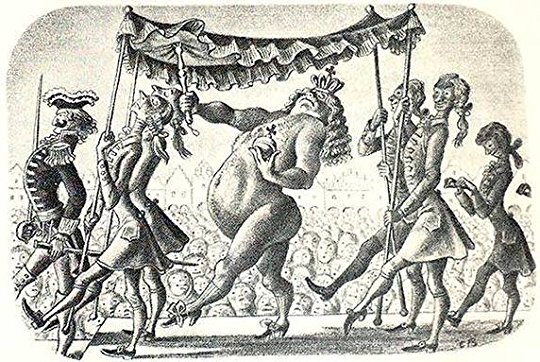

Registry data is everywhere. Like the emperor’s new clothes, is it something you accept at face value, uncritically, because everyone else does? Do you dismiss the implications of registry design if the data interpretation matches your prejudice? Perhaps instead, next time you read a paper reporting registry data or are at a conference listening to a presentation about a ‘single arm trial’, be like the child in the story and puncture the fallacy. Ask whether any meaningful information is left once the biases inherent in the design are stripped away.